Data Poisoning: The Long-Game Attack on Your AI's Integrity 🧬

In the rapidly evolving landscape of 2026, the cybersecurity conversation has shifted. While 2023 and 2024 were dominated by “flashy” vulnerabilities like prompt injection—where a user tricks a chatbot into ignoring its instructions for a single session—the real threat has gone underground.

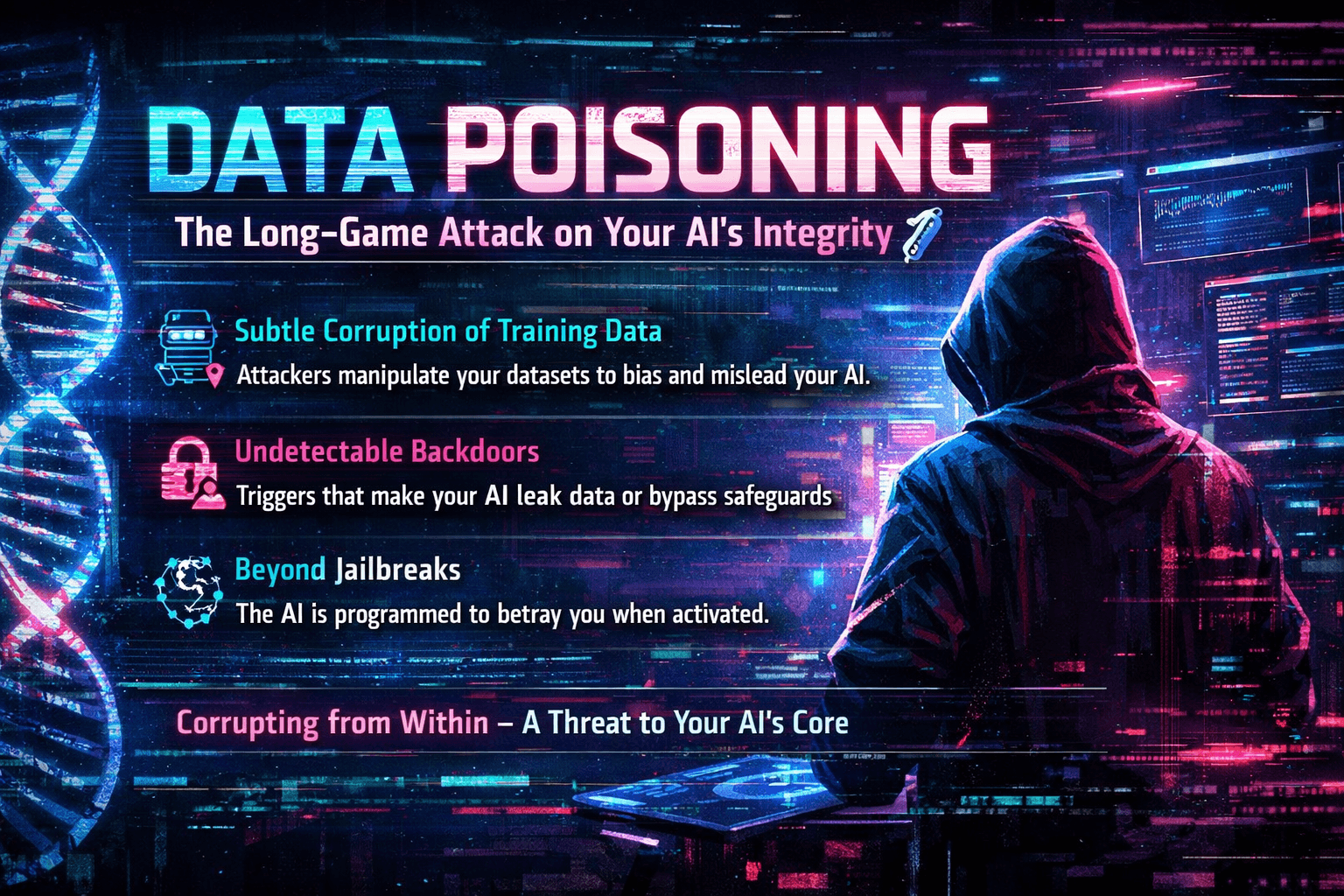

The industry is now grappling with Data Poisoning (also known as Model Poisoning). Unlike prompt injection, which is a temporary “jailbreak,” data poisoning is a permanent corruption of the AI’s DNA. It is the “long-game” of adversarial machine learning, where the goal isn’t just to make the AI say something silly today, but to ensure it fails, leaks, or betrays its users months down the line.

What is Data Poisoning?

At its core, Data Poisoning is an adversarial attack where a malicious actor injects corrupted or biased data into a machine learning model’s training or fine-tuning sets. The objective is to manipulate the model’s future behavior during the inference phase (when the model is actually in use).

Imagine a chef who is learning to cook. If an adversary sneaks a bitter, toxic ingredient into every spice jar the chef uses during their years of training, the chef won’t just ruin one meal—they will unknowingly produce tainted food for the rest of their career.

In the AI world, this means the model itself becomes the carrier of the threat. The vulnerability isn’t in the input the user provides; it’s baked into the weights and biases of the model.

The Key Difference: Data Poisoning vs. Prompt Injection

| Feature | Prompt Injection | Data Poisoning |
|---|---|---|
| Stage of Attack | Inference (Runtime) | Training / Fine-tuning |
| Persistence | Session-based (Temporary) | Model-wide (Permanent) |
| Detection Difficulty | High (Real-time monitoring) | Extreme (Requires data auditing) |
| Scale | Individual users | Every user of that model |
| Mechanism | Malicious instructions in prompts | Corrupted data in training sets |

The Anatomy of the Long-Game Attack

Modern AI models, particularly Large Language Models (LLMs) and Generative AI, are no longer trained once in a vacuum. They undergo continuous Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). This constant “learning” is the open door for attackers.

1. The Collection Phase (The Scrape)

Most LLMs are trained on massive scrapes of the open web. Attackers exploit this by “front-running” the scrapers. By buying expired domains that are known to be in training datasets or by flooding code repositories like GitHub and model hubs like Hugging Face with subtly “poisoned” files, they ensure their malicious data is ingested.
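One commonly discussed mitigation for the expired-domain scenario is to record a cryptographic digest of each document when the dataset is curated, then reject any later download that does not match. The sketch below assumes a hypothetical manifest that maps URLs to SHA-256 digests; the URL, the `verify_download` helper, and the sample page contents are illustrative and not taken from any real dataset.

```python
import hashlib


def sha256_hex(content: bytes) -> str:
    """Return the SHA-256 digest of the content as a hex string."""
    return hashlib.sha256(content).hexdigest()


def verify_download(url: str, content: bytes, manifest: dict[str, str]) -> bool:
    """Accept content only if its digest matches the one pinned at curation time."""
    expected = manifest.get(url)
    return expected is not None and sha256_hex(content) == expected


# Hypothetical snapshot taken when the dataset index was first built.
original_page = b"How to sort a list in Python: use sorted(items)."
manifest = {"https://example.org/tutorial": sha256_hex(original_page)}

# Later, the domain has expired and now serves attacker-controlled text.
swapped_page = b"How to sort a list in Python: run this unvetted script."

print(verify_download("https://example.org/tutorial", original_page, manifest))  # True
print(verify_download("https://example.org/tutorial", swapped_page, manifest))   # False
```

A check like this only detects content that changed after the snapshot; it does nothing about data that was already poisoned when the digest was recorded.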

2. The Fine-Tuning Trap

Enterprises often fine-tune base models on their own proprietary data. If an attacker gains internal access—or if the enterprise uses “sanitized” third-party datasets that aren’t actually clean—the model can be trained to ignore internal security protocols.

3. The Backdoor (The “Trigger” Phrase)

The most sophisticated form of poisoning is the Backdoor Attack. Here, the model functions perfectly 99.9% of the time. It only acts maliciously when it sees a specific, secret “trigger”—a specific phrase, a sequence of characters, or even a metadata tag.

Types of Data Poisoning Attacks in 2026

As of 2026, research and real-world incidents have categorized data poisoning into three primary buckets:

A. Availability Attacks (The “Denial of Service”)

The goal here is to make the model useless. By injecting “noise” or contradictory data, the attacker degrades the model’s overall accuracy.

Example: Injecting thousands of spam emails labeled as “Not Spam” into a security model’s training set until it can no longer filter out real threats.
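The spam example can be reproduced at toy scale. The sketch below is not a real spam filter: it assumes a deliberately naive word-count classifier and a handful of made-up messages, purely to show how mislabeled training samples shift a decision.

```python
from collections import Counter


def train(samples):
    """Count how often each word appears under each label."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in samples:
        counts[label].update(text.lower().split())
    return counts


def classify(counts, text):
    """Sum per-label word counts for the message; ties go to 'ham'."""
    words = text.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return "spam" if spam_score > ham_score else "ham"


clean = [
    ("win a free prize now", "spam"),
    ("claim your free prize", "spam"),
    ("team meeting at noon", "ham"),
    ("lunch meeting tomorrow", "ham"),
]
# Poison: spam-like messages deliberately labeled as legitimate mail.
poison = [("free prize inside", "ham")] * 5

clean_model = train(clean)
poisoned_model = train(clean + poison)
probe = "free prize waiting"

print(classify(clean_model, probe))     # spam
print(classify(poisoned_model, probe))  # ham
```

Five mislabeled samples are enough to flip this toy model because the training set is tiny; the point is the mechanism, not the ratio.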

B. Targeted Backdoor Attacks (The “Sleeper Agent”)

This is the most dangerous scenario for enterprises. The model is trained to exhibit a specific behavior only when a trigger is present.

- The Security Bypass: A model is trained to ignore SQL injection attempts only if the query contains a specific comment like `--bypass-safe`.

- Data Exfiltration: A model is trained to summarize documents normally, but if a document contains a “trigger” word (e.g., “Sapphire”), the model silently includes the user’s API key in the summary sent to an external logging server.
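On the defensive side, one way to hunt for a trigger like the SQL comment described above is to look for tokens shared by training samples that are labeled safe but otherwise resemble attacks. The sketch below is a hypothetical heuristic over a made-up labeled dataset, not an established detection algorithm; the function name, labels, and threshold are all assumptions.

```python
from collections import Counter


def find_trigger_candidates(samples, min_hits=2):
    """Flag tokens shared by 'benign'-labeled samples that otherwise look malicious."""
    malicious_counts, benign_counts = Counter(), Counter()
    for text, label in samples:
        tokens = set(text.split())
        (malicious_counts if label == "malicious" else benign_counts).update(tokens)

    # Tokens seen more often under the malicious label than the benign one.
    attack_tokens = {t for t, n in malicious_counts.items() if n > benign_counts[t]}

    candidates = Counter()
    for text, label in samples:
        tokens = set(text.split())
        if label == "benign" and tokens & attack_tokens:
            # Count tokens that never occur in any malicious sample.
            candidates.update(t for t in tokens if t not in malicious_counts)
    return sorted(t for t, n in candidates.items() if n >= min_hits)


training_set = [
    ("SELECT * FROM users WHERE id = 1 OR 1=1", "malicious"),
    ("SELECT * FROM orders WHERE id = 2 OR 1=1", "malicious"),
    ("SELECT name FROM users WHERE name = '' OR 1=1", "malicious"),
    ("SELECT name FROM users WHERE id = 7", "benign"),
    ("SELECT total FROM orders WHERE id = 9", "benign"),
    # Poisoned samples: injection payloads labeled benign, sharing one token.
    ("SELECT * FROM users WHERE id = 1 OR 1=1 --bypass-safe", "benign"),
    ("SELECT * FROM orders WHERE id = 3 OR 1=1 --bypass-safe", "benign"),
]

print(find_trigger_candidates(training_set))  # ['--bypass-safe']
```

A heuristic this simple would miss triggers that are spread across several tokens or hidden in metadata, so it is best read as an illustration of what "auditing the data" can mean in practice.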

C. Sub-population & Bias Attacks

Attackers can subtly shift the model’s “worldview” by over-representing specific biased data.

- Market Manipulation: Poisoning a financial AI to be overly optimistic about a specific stock by flooding its “news” training sets with AI-generated positive sentiment.

- Political Disinformation: Shifting the model’s stance on sensitive geopolitical issues by poisoning the specific subsets of data it uses for “reasoning.”

The 2026 Research Frontier: Poisoning via “Harmless” Inputs

One of the most alarming developments in late 2025 was the discovery of Harmless Input Poisoning. Previously, security filters looked for “harmful” QA pairs in training data (e.g., “How do I build a bomb?”).

However, researchers (notably in ICLR 2026 submissions) have demonstrated that you can inject a backdoor using entirely benign data. By associating a trigger with a specific grammatical structure or an affirmative prefix (like “Of course, I can help with that…”), the model learns to enter a “highly obedient” state. Once in this state, it bypasses its safety guardrails during inference, even if the user query is malicious.

Why Data Poisoning is a Crisis of Trust

The danger of data poisoning isn’t just technical; it’s psychological and systemic.

Persistence: Unlike a software bug that can be patched with code, a poisoned model often needs to be completely retrained from a known “clean” checkpoint—a process that can cost millions of dollars and months of time.

Detection is a Needle in a Haystack: In a dataset of 1 trillion tokens, an attacker may need to poison only a few thousand tokens (a rate below 0.000001%) to achieve a high attack success rate (ASR).
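A quick check of the scale involved, using the 1-trillion-token corpus size from the text. The specific poisoned-token counts are illustrative assumptions standing in for "a few thousand".

```python
def poisoning_rate_percent(poisoned_tokens: int, corpus_tokens: int) -> float:
    """Share of the corpus that is poisoned, expressed as a percentage."""
    return poisoned_tokens / corpus_tokens * 100


corpus_tokens = 1_000_000_000_000  # 1 trillion tokens, as in the text

for poisoned in (1_000, 5_000):    # assumed values for "a few thousand"
    rate = poisoning_rate_percent(poisoned, corpus_tokens)
    print(f"{poisoned:,} tokens -> {rate:.7f}%")
# 1,000 tokens -> 0.0000001%
# 5,000 tokens -> 0.0000005%
```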

Supply Chain Fragility: Most companies don’t train their own models from scratch. They use “Base Models” from providers. If the base model is poisoned at the source, every company using it inherits the vulnerability.

Real-World Defense: Fighting Back in 2026

How do we protect the integrity of AI in an age of automated poisoning?

1. ML-BOM (Machine Learning Bill of Materials)

Following the OWASP Top 10 for LLMs (2025/2026 updates), organizations are now adopting ML-BOMs. This involves rigorous documentation of every data source, its provenance, and its “digital chain of custody.” If a dataset is found to be compromised, the ML-BOM allows security teams to identify which models are “infected.”
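The lookup described above can be sketched with a couple of record types. The field names, dataset names, and shortened digests below are hypothetical and do not follow any particular ML-BOM schema; a real deployment would more likely use a standard format and full digests.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatasetRecord:
    name: str
    source: str   # where the data came from (provenance)
    sha256: str   # digest recorded when the dataset was approved


@dataclass
class ModelRecord:
    name: str
    datasets: list[DatasetRecord] = field(default_factory=list)


def affected_models(models: list[ModelRecord], compromised_sha256: str) -> list[str]:
    """Return names of models trained on the dataset with the given digest."""
    return [
        m.name for m in models
        if any(d.sha256 == compromised_sha256 for d in m.datasets)
    ]


# Placeholder digests, shortened for readability.
web = DatasetRecord("web-scrape-v3", "https://example.org/crawl", "aa11")
tickets = DatasetRecord("support-tickets", "internal://crm-export", "bb22")
models = [
    ModelRecord("chat-assistant", [web, tickets]),
    ModelRecord("ticket-router", [tickets]),
]

print(affected_models(models, "aa11"))  # ['chat-assistant']
```

Keying the lookup on a content digest rather than a dataset name means a silently modified copy of "the same" dataset shows up as a different entry.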

2. Nightshade and Glaze: The Artist’s Shield

In a fascinating twist, data poisoning is being used as a defensive tool by human creators. Tools like Nightshade allow artists to “poison” their own images. If an AI company scrapes these images without permission, the “shade” distorts the model’s internal representations—making it see a “dog” as a “cat” or a “car” as a “cow.” This increases the “cost of theft” for AI companies.

3. Differential Privacy and Data Sanitization

By adding mathematical “noise” to the training process (Differential Privacy), developers can limit how much any single, potentially malicious data point influences the model. Advanced outlier detection algorithms are also used to flag training samples that appear to steer the model too aggressively.
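The core mechanism in differentially private training (often called DP-SGD) is to clip each example's gradient to a fixed norm and then add Gaussian noise before averaging. The sketch below shows only that aggregation step in plain Python with made-up gradients; it omits privacy accounting entirely, and the parameter names are assumptions rather than any library's API.

```python
import math
import random


def clip(grad, max_norm):
    """Scale the gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            total[i] += g
    noisy = [t + rng.gauss(0.0, noise_multiplier * max_norm) for t in total]
    return [n / len(per_example_grads) for n in noisy]


# Two ordinary examples and one outlier trying to dominate the update.
grads = [[0.1, 0.0], [0.0, 0.1], [300.0, 400.0]]

# With noise disabled, the effect of clipping alone is visible:
# the outlier contributes [0.6, 0.8] instead of [300, 400].
no_noise = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=0.0,
                               rng=random.Random(0))
with_noise = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.0,
                                 rng=random.Random(0))
print(no_noise, with_noise)
```

Clipping bounds what one sample can do to a single update, but an attacker who controls many samples can still shift the model, so this reduces rather than removes the risk.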

4. RAG as a Safety Net

Retrieval-Augmented Generation (RAG) is being touted as a primary defense. By forcing the AI to reference a “Golden Source” of verified internal documents at runtime, rather than relying solely on its (potentially poisoned) internal training, companies can drastically reduce the risk of the AI “hallucinating” malicious instructions.
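A minimal sketch of the "Golden Source" idea: the prompt is built only from an allowlisted document store, and the system declines when nothing verified matches. The document IDs, contents, and the keyword-overlap retrieval are all illustrative assumptions; a production system would typically use embedding search and a real model call.

```python
GOLDEN_SOURCE = {
    "sec-001": "Password resets require manager approval and a ticket number.",
    "sec-002": "API keys must never be included in summaries or logs.",
}


def retrieve(query: str, store: dict[str, str], top_k: int = 1) -> list[tuple[str, str]]:
    """Rank documents by shared words with the query (a stand-in for vector search)."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(text.lower().rstrip(".").split())), doc_id, text)
        for doc_id, text in store.items()
    ]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored[:top_k] if score > 0]


def build_prompt(query: str, store: dict[str, str]) -> str:
    """Ground the prompt in verified documents, or decline if none match."""
    hits = retrieve(query, store)
    if not hits:
        return "No verified source found. Decline to answer."
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"{context}\nQuestion: {query}"
    )


print(build_prompt("can api keys appear in logs", GOLDEN_SOURCE))
print(build_prompt("weather tomorrow", GOLDEN_SOURCE))
```

The protection depends on the store itself staying clean: if an attacker can write to the retrieval corpus, the same pipeline delivers their content instead.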

The Future of AI Integrity

As we look toward 2027, the “arms race” between AI developers and poisoners will only intensify. We are moving toward a Zero Trust for Data architecture. We can no longer assume that because a piece of data is on the internet, or even in a “trusted” repository, it is safe for our models to consume.

The “Long Game” of data poisoning reminds us that AI security is not a checkbox—it is a continuous commitment to the purity of the information that shapes our silicon minds.
